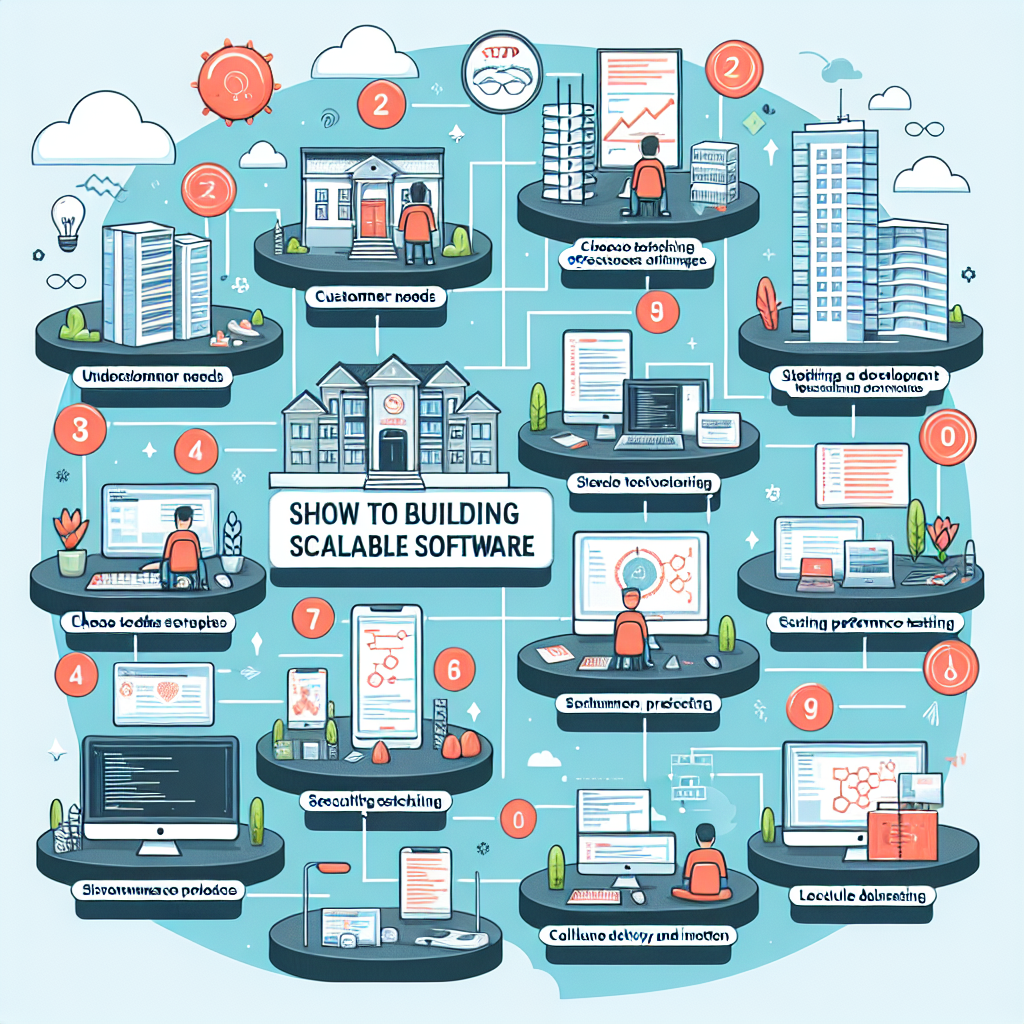

Contents

- Designing Scalable Architecture

- Implementing Efficient Algorithms

- Leveraging Cloud Computing Platforms

- Ensuring Robust Security Measures

Designing Scalable Architecture

Understanding the Basics of Scalable Architecture

When it comes to building scalable software, the architecture is the backbone. Think of it like the foundation of a house – if it’s solid, you can keep expanding without fear of collapse. A well-thought-out architecture will save you from loads of headaches later on. It’s really about planning for the future from day one.

The essence of scalable architecture is ensuring that your software can handle increased loads without compromising performance. Imagine driving on a bustling highway; a scalable design would be akin to having ample lanes ready for peak traffic. My personal take? Invest time upfront to save a ton later.

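Exploring different architectural patterns is crucial. One size doesn’t fit all, and you need to choose what’s best for your project. From microservices to serverless computing, each has its advantages: microservices let you deploy and scale individual services independently, while serverless platforms scale individual functions with demand without you managing servers. Your choice will strongly shape your software’s scalability.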

Choosing the Right Tools and Technologies

Let’s get practical – tools and tech are your extra hands and brains. The right choice can make your job so much easier. When selecting tools, look for those specifically designed to support scalability, like containerization software such as Docker or orchestration solutions like Kubernetes.

In my experience, embracing modern technologies is a game-changer. Not just the buzzwords, but tools that have proven their mettle. Opt for databases that support sharding, like MongoDB, or distributed databases, like Cassandra, which spread data across many nodes. Beyond the tech, it’s about creating an ecosystem that sings in harmony for your project.
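To make sharding concrete, here is a minimal Python sketch of hash-based shard routing: a stable hash of the shard key picks one of N shards, so a given record always lands on the same shard. The shard names and the `shard_for` function are hypothetical. MongoDB and Cassandra handle partitioning internally with their own schemes (MongoDB supports hashed shard keys; Cassandra places data on a token ring), so treat this as an illustration of the idea, not either database's algorithm.

```python
import hashlib

# Hypothetical shard names, for illustration only.
SHARDS = ["shard-a", "shard-b", "shard-c", "shard-d"]

def shard_for(key: str, shards: list[str] = SHARDS) -> str:
    """Route a shard key to a shard using a stable hash.

    Python's built-in hash() is salted per process for strings, so SHA-256
    is used instead to get identical routing on every server and restart.
    """
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    index = int.from_bytes(digest[:8], "big") % len(shards)
    return shards[index]

if __name__ == "__main__":
    for user_id in ["user-1", "user-2", "user-3"]:
        print(user_id, "->", shard_for(user_id))
```

One design caveat: with plain modulo routing, changing the number of shards remaps most keys, which is why production systems typically use consistent hashing or range-based chunks that can be rebalanced incrementally.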

Also, don’t underestimate the importance of keeping up with technological advancements. Technology is ever-evolving, and staying updated helps ensure your software doesn’t become obsolete with time. Join communities, attend webinars, and continuously upskill to keep your software future-proof.

Decoupling and Cohesion in Design

A poorly designed system can become your worst nightmare as your software scales. Decoupled systems scale better because each component can be changed, deployed, and scaled without forcing changes on the others. It’s like owning a modular home that allows you to add more rooms as needed, without altering the existing structure.

I’ve found that cohesion is equally important. A cohesive component groups closely related responsibilities, so it has one clear job and a small, well-defined interface to the rest of the system. Those clear interfaces are what let components communicate reliably, which is crucial when performance becomes paramount. Cohesion ensures that, while decoupled, the units still work in sync.

Realistically, striking the right balance between decoupling and cohesion is tricky but rewarding. You’ll gain a resilient system that can grow without causing dependency nightmares. And trust me, it’s a feeling akin to playing Jenga and never having the stack tumble down.
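One common way to get components that are decoupled yet still cooperate is to put a queue between them: the producer only publishes events, the consumer only processes them, and neither calls the other directly. The Python sketch below uses the standard library's `queue.Queue` and a thread as a stand-in for a real message broker; the `OrderPlaced` event and the function names are hypothetical.

```python
import queue
import threading
from dataclasses import dataclass

@dataclass(frozen=True)
class OrderPlaced:
    """Hypothetical event type: the only contract the two sides share."""
    order_id: int
    amount: float

def checkout(events: queue.Queue, order_id: int, amount: float) -> None:
    """Producer: publishes an event and returns without waiting for billing."""
    events.put(OrderPlaced(order_id, amount))

def billing_worker(events: queue.Queue, billed: list) -> None:
    """Consumer: handles events until it receives the None shutdown sentinel."""
    while True:
        event = events.get()
        if event is None:
            break
        billed.append((event.order_id, event.amount))

def demo() -> list:
    events: queue.Queue = queue.Queue()
    billed: list = []
    worker = threading.Thread(target=billing_worker, args=(events, billed))
    worker.start()
    for order_id, amount in [(1, 19.99), (2, 5.00)]:
        checkout(events, order_id, amount)
    events.put(None)  # tell the consumer to stop
    worker.join()
    return billed

if __name__ == "__main__":
    print(demo())
```

Because `checkout` never references billing, you could in principle run more consumers or move the queue to an external broker without changing the producer's logic; the shared event type is the cohesive, well-defined interface that keeps the two sides in sync.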

Implementing Efficient Algorithms

Choosing the Right Algorithms

An algorithm is like the logic that drives your software. Pick the right one, and your software becomes swift and responsive; pick the wrong one, and things bog down. We need algorithms that scale well with an increased volume of data and users.

Consider sorting and searching algorithms with proven efficiency. Depending on your needs, popular choices include quicksort and mergesort, which sort in O(n log n) time (quicksort on average, with an O(n²) worst case), and binary search, which finds an item in a sorted collection in O(log n) time. Each serves a different purpose, and your choice can significantly impact scalability.
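As an illustration of why binary search scales, here is a small Python sketch using the standard-library `bisect` module: on a sorted list it locates an item in O(log n) comparisons instead of scanning every element. The function name `contains_sorted` is just for illustration.

```python
import bisect

def contains_sorted(sorted_items: list[int], target: int) -> bool:
    """Return True if target is in sorted_items, using binary search.

    Precondition: sorted_items must be sorted in ascending order;
    binary search gives wrong answers on unsorted input.
    """
    i = bisect.bisect_left(sorted_items, target)
    return i < len(sorted_items) and sorted_items[i] == target

if __name__ == "__main__":
    ids = sorted([42, 7, 19, 3, 88, 61])  # sort once, O(n log n)
    print(contains_sorted(ids, 19))  # True
    print(contains_sorted(ids, 20))  # False
```

Sorting costs O(n log n) once, so binary search pays off when you search the same data many times.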

From my own work, I’ve seen how applying the wrong algorithms can bottleneck scaling efforts. It’s crucial to analyze the algorithmic complexity and performance, aiming for efficiency that will stay intact as your software and customer base grow.
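A quick way to see why complexity analysis matters: both functions below answer "does this list contain a duplicate?", but the nested-loop version does O(n²) comparisons while the set-based version does O(n) expected work. The function names are illustrative, and the timing loop only prints whatever your machine measures.

```python
import timeit

def has_duplicates_quadratic(items: list) -> bool:
    """Compare every pair: about n*(n-1)/2 comparisons, O(n^2) time."""
    for i in range(len(items)):
        for j in range(i + 1, len(items)):
            if items[i] == items[j]:
                return True
    return False

def has_duplicates_linear(items: list) -> bool:
    """One pass with a hash set: O(n) expected time, O(n) extra memory.
    Requires hashable items."""
    seen = set()
    for item in items:
        if item in seen:
            return True
        seen.add(item)
    return False

if __name__ == "__main__":
    data = list(range(2_000))  # no duplicates: the worst case for both
    for fn in (has_duplicates_quadratic, has_duplicates_linear):
        seconds = timeit.timeit(lambda: fn(data), number=3)
        print(f"{fn.__name__}: {seconds:.4f}s")
```

Note the trade-off: the linear version buys its speed with extra memory for the set, so space complexity belongs in the analysis too.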

Optimizing Performance through Algorithm Design

Once you have the right algorithms, the next step is optimization. Just like tuning a car for the race track, well-designed algorithms can greatly enhance software performance. The key here is refinement – making sure no processing power is wasted.

Redundancy is another area of focus. Make algorithms run smoothly by minimizing redundancy, ensuring they perform only the necessary computations. This approach often involves testing and iterating until you discover the sweet spot of operation.
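A small example of removing redundant work: the first function below recomputes the same total on every iteration, which quietly turns an O(n) job into O(n²). The second hoists that loop-invariant computation out of the loop. The function names are hypothetical.

```python
def normalize_redundant(values: list[float]) -> list[float]:
    # sum(values) is recomputed for every element: O(n^2) overall.
    return [v / sum(values) for v in values]

def normalize_hoisted(values: list[float]) -> list[float]:
    # The total never changes inside the loop, so compute it once: O(n).
    # Like the version above, this assumes the values do not sum to zero.
    total = sum(values)
    return [v / total for v in values]

if __name__ == "__main__":
    print(normalize_hoisted([1.0, 1.0, 2.0]))  # [0.25, 0.25, 0.5]
```

Both return the same result; only the amount of work differs, and that difference grows with the size of the input.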

You’d be amazed how many resources subtle adjustments can save. It’s really about precision. Small changes can cumulatively have a big impact on efficiency, helping your software remain fast and responsive as it scales up.

Caching and Precomputation Tactics

Caching is your best buddy for efficiency. It stores the results of recurring computations, or frequently read data, in fast memory so they can be retrieved without redoing the work. Think of it like cutting the line at a concert – you get to experience everything faster without redundant checks.
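In Python, the standard library's `functools.lru_cache` decorator is a simple in-process cache: it stores results keyed by the function's arguments and evicts the least recently used entries once `maxsize` is reached. The `slow_price_lookup` function below is a hypothetical stand-in for an expensive computation or query.

```python
import functools
import time

CALLS = {"count": 0}  # counts how often the slow path actually runs

@functools.lru_cache(maxsize=1024)
def slow_price_lookup(product_id: int) -> float:
    """Hypothetical expensive computation, e.g. a pricing query."""
    CALLS["count"] += 1
    time.sleep(0.1)  # simulate latency
    return product_id * 1.5

if __name__ == "__main__":
    for _ in range(3):
        slow_price_lookup(7)  # only the first call pays the 0.1 s cost
    print(CALLS["count"])  # 1
    print(slow_price_lookup.cache_info())
```

Two caveats apply: `lru_cache` has no time-based expiry, so cached results go stale if the underlying data changes, and it lives inside a single process. When several servers need to share cached data, teams typically reach for an external cache such as Redis or Memcached.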

Precomputation can also enhance efficiency dramatically. By doing intensive data processing ahead of time, you free up real-time operations. This approach can be priceless, especially in environments where response time is crucial.
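A classic precomputation example is a prefix-sum array: you spend O(n) once up front, and afterwards any "sum of this range" query is answered in O(1) instead of re-adding the range each time. The daily-sales numbers below are invented for illustration.

```python
from itertools import accumulate

def build_prefix_sums(values: list[int]) -> list[int]:
    """prefix[i] holds the sum of values[0:i]; prefix[0] is 0."""
    return [0, *accumulate(values)]

def range_sum(prefix: list[int], start: int, end: int) -> int:
    """Sum of values[start:end] (end exclusive), answered in O(1)."""
    return prefix[end] - prefix[start]

if __name__ == "__main__":
    daily_sales = [120, 80, 95, 150, 60, 200, 175]  # illustrative data
    prefix = build_prefix_sums(daily_sales)  # precompute once
    print(range_sum(prefix, 2, 5))  # 95 + 150 + 60 = 305
```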

From my journey, mastering caching strategies and precomputing has been essential in making software perform at lightning speed. They reduce the load on processors and databases, allowing the software to handle more users seamlessly.

Leveraging Cloud Computing Platforms

Understanding Cloud Scalability Options

Cloud platforms have fundamentally reshaped the scalability landscape. They offer unmatched flexibility, allowing you to scale resources up or down according to demand, often without skipping a beat. It’s akin to having magic at your fingertips.

Different cloud service models cater to different needs. Infrastructure as a Service (IaaS) provides virtual machines, storage, and networking that you manage yourself, while Platform as a Service (PaaS) also manages the operating system and runtime so you deploy only your application. I’ve personally benefited from the adaptability and cost-effectiveness these models offer. They provide the capacity to manage substantial loads without investing heavily in physical infrastructure.

If scalability is your mission, the cloud is your ally. Its pay-as-you-go model keeps costs in line with actual usage, making it a perfect match for dynamic scaling needs. This ease of scalability is why cloud computing takes the spotlight in modern software architecture strategies.

Choosing the Right Cloud Provider

Not all cloud providers are created equal, which makes selecting the right one crucial. Gartner’s Magic Quadrant reports and peer feedback are great starting points for evaluating providers. AWS, Google Cloud, and Microsoft Azure offer diverse features that align with scalable software needs.

Consider factors such as server locations, pricing models, and services offered when choosing a provider. Analyzing your software requirements against each provider’s features helps you pick the best fit. The right choice can make all the difference in achieving seamless and cost-effective scaling.

In my projects, aligning the choice with strategic goals has helped achieve desired outcomes. It’s a partnership, really – finding the provider that not only meets your needs today but can also grow with you in the future.

Utilizing Cloud Automation Tools

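Automation is the heart of cloud scalability. Tools like Terraform, which provisions infrastructure from declarative configuration files, and Ansible, which automates configuration and deployment, let you define resources and scaling policies as code, putting efficiency on autopilot. They reduce the manual workload, meaning fewer opportunities for human error.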

In practical terms, automation enables your infrastructure to handle variability in demand without intervention. You’re effectively setting provisioning rules and letting the system take over. I love how it trims the operational fat, freeing up time to focus on core development.
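As a concrete example of a provisioning rule, the Kubernetes Horizontal Pod Autoscaler documents its core calculation as desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue). The Python sketch below reproduces only that ratio plus min/max clamping; the real controller adds a tolerance band, stabilization windows, and handling for pods that are not yet ready. The default bounds here are hypothetical.

```python
import math

def desired_replicas(current_replicas: int,
                     current_metric: float,
                     target_metric: float,
                     min_replicas: int = 2,
                     max_replicas: int = 20) -> int:
    """Simplified HPA-style target-tracking calculation.

    min_replicas and max_replicas are hypothetical bounds for illustration.
    """
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    raw = math.ceil(current_replicas * current_metric / target_metric)
    # Clamp so a metric spike or drop can't scale past configured limits.
    return max(min_replicas, min(max_replicas, raw))

if __name__ == "__main__":
    # 4 replicas averaging 90% CPU against a 60% target: scale out.
    print(desired_replicas(4, 90, 60))  # 6
    # Load falls to 15% CPU: scale in, but never below the minimum.
    print(desired_replicas(6, 15, 60))  # 2
```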

By leveraging automation, you can create self-healing systems that react to changes in demand in real time. It’s a kind of wizardry that removes many of the hassles associated with scaling, offering peace of mind as your user base grows.
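Self-healing behavior usually comes from a reconciliation loop: the system repeatedly compares the desired state (say, N healthy instances) with the observed state and takes whatever actions close the gap. Kubernetes controllers follow this pattern. The Python sketch below shows a single reconciliation pass; the `Instance` type and the action strings are hypothetical, and a real system would call its cloud provider's API instead of returning a list of descriptions.

```python
from dataclasses import dataclass

@dataclass
class Instance:
    """Hypothetical view of one running instance."""
    name: str
    healthy: bool

def reconcile(desired_count: int, instances: list[Instance]) -> list[str]:
    """One pass of a reconciliation loop: return the actions needed to
    move the observed state toward the desired state."""
    actions = []
    healthy = [inst for inst in instances if inst.healthy]
    # Remove anything that failed its health check.
    for inst in instances:
        if not inst.healthy:
            actions.append(f"terminate {inst.name}")
    # Launch or remove instances to reach the desired healthy count.
    gap = desired_count - len(healthy)
    if gap > 0:
        actions.extend(["launch new instance"] * gap)
    elif gap < 0:
        for inst in healthy[:-gap]:
            actions.append(f"terminate {inst.name}")
    return actions

if __name__ == "__main__":
    fleet = [Instance("web-1", True), Instance("web-2", False), Instance("web-3", True)]
    print(reconcile(3, fleet))  # ['terminate web-2', 'launch new instance']
```

Running this pass on a schedule, or whenever health data changes, is what lets the system recover from failures without someone stepping in.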

Ensuring Robust Security Measures

Defining Security Policies and Procedures

Security often gets overshadowed in the hustle towards scalability, but make no mistake: it’s paramount. Defining robust security policies is your first line of defense. It’s like putting locks on all possible entry points to a house.

Security policies should cover data encryption, access controls, and incident response strategies. They offer guidelines for protecting data integrity and ensuring business continuity. From where I stand, having consistent and clear policies is a non-negotiable step in securing scalable software.
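Access-control policies ultimately have to be enforced in code. A minimal, deny-by-default role check might look like the Python sketch below. The roles, permission strings, and `is_allowed` function are hypothetical; production systems usually delegate this to an identity provider or policy engine rather than a hard-coded dictionary.

```python
# Hypothetical role-to-permission mapping. Each role gets only the
# permissions it needs (least privilege).
ROLE_PERMISSIONS: dict[str, frozenset[str]] = {
    "viewer": frozenset({"report:read"}),
    "editor": frozenset({"report:read", "report:write"}),
    "admin": frozenset({"report:read", "report:write", "user:manage"}),
}

def is_allowed(roles: set[str], permission: str) -> bool:
    """Deny by default: allow only if some role explicitly grants the
    permission. Unknown roles grant nothing."""
    return any(permission in ROLE_PERMISSIONS.get(role, frozenset())
               for role in roles)

if __name__ == "__main__":
    print(is_allowed({"viewer"}, "report:write"))  # False
    print(is_allowed({"editor"}, "report:write"))  # True
    print(is_allowed({"intern"}, "report:read"))   # False (unknown role)
```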

These policies also need periodic review to adapt to evolving threats. Just like changing locks periodically, keeping up with security trends and potential vulnerabilities ensures your software stays protected against malicious activities.

Implementing Security Best Practices

Beyond policies, actual practices make security real. Implementing measures such as multi-factor authentication, least-privilege access, and regular audits fortifies your software’s defense. These steps are like setting up barriers to safeguard a fort.
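Many multi-factor authentication setups use time-based one-time passwords (TOTP, defined in RFC 6238), the codes shown by authenticator apps. To show what happens under the hood, here is a minimal standard-library Python sketch of the RFC 6238 algorithm (HMAC-SHA1 variant). It is for illustration only: in production, use a vetted library, store secrets securely, tolerate small clock drift, and rate-limit attempts.

```python
import hashlib
import hmac
import struct
import time
from typing import Optional

def hotp(secret: bytes, counter: int, digits: int = 6) -> str:
    """HOTP (RFC 4226): HMAC-SHA1 over an 8-byte big-endian counter."""
    mac = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = mac[-1] & 0x0F  # dynamic truncation
    code = int.from_bytes(mac[offset:offset + 4], "big") & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)

def totp(secret: bytes, timestamp: Optional[float] = None,
         step: int = 30, digits: int = 6) -> str:
    """TOTP (RFC 6238): HOTP with counter = floor(unix_time / step)."""
    if timestamp is None:
        timestamp = time.time()
    return hotp(secret, int(timestamp // step), digits)

def verify_totp(secret: bytes, submitted: str,
                timestamp: Optional[float] = None) -> bool:
    """Compare in constant time to avoid leaking timing information."""
    return hmac.compare_digest(totp(secret, timestamp), submitted)

if __name__ == "__main__":
    # RFC 6238 test vector: SHA-1 secret, T = 59 s, 8 digits.
    print(totp(b"12345678901234567890", timestamp=59, digits=8))  # 94287082
```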

Security is never a one-time effort. Regular updates and patches ensure that your software is shielded from new vulnerabilities. My belief is that prevention is always better than a cure. Investing in security practices can save you costly breaches and downtime.

Additionally, keep your entire team informed. Security is everybody’s responsibility. By developing awareness and preparedness for security threats, you build a proactive defense system that contributes to robust, scalable software.

Monitoring and Incident Management

Even with the best defenses, breaches can happen. Having monitoring systems in place is crucial for early detection of potential threats. These systems act like watchtowers alerting you to suspicious activities.
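A simple monitoring rule of this kind is "alert when one source produces too many failed logins in a short window". The Python sketch below keeps a sliding window of timestamps per source IP using `collections.deque`. The threshold, window length, and class name are hypothetical, and real deployments usually express such rules in their monitoring or SIEM tooling rather than hand-written code.

```python
from collections import defaultdict, deque

class FailedLoginMonitor:
    """Flags a source that exceeds max_failures within window_seconds.
    The defaults are hypothetical, for illustration."""

    def __init__(self, max_failures: int = 5, window_seconds: float = 60.0):
        self.max_failures = max_failures
        self.window_seconds = window_seconds
        self._events: dict[str, deque] = defaultdict(deque)

    def record_failure(self, source_ip: str, timestamp: float) -> bool:
        """Record a failed login; return True if this source should raise
        an alert. Assumes timestamps arrive in non-decreasing order."""
        window = self._events[source_ip]
        window.append(timestamp)
        # Drop events that have slid out of the time window.
        while window and window[0] <= timestamp - self.window_seconds:
            window.popleft()
        return len(window) > self.max_failures

if __name__ == "__main__":
    monitor = FailedLoginMonitor(max_failures=3, window_seconds=60)
    alerts = [monitor.record_failure("203.0.113.7", t) for t in (0, 10, 20, 30)]
    print(alerts)  # [False, False, False, True]
```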

Establish an effective incident management process. Define roles and responsibilities, ensuring quick response and mitigation in the event of a security breach. It’s like conducting fire drills – when an actual fire occurs, everyone knows what to do.

In my journey, proactive monitoring and incident management have been lifesavers. Minimizing downtime and reducing impact are achievable when you have a set plan ready to go. It’s peace of mind in knowing that despite eventualities, you’re prepared.

FAQ

What is scalable software architecture?

Scalable software architecture is a design approach that allows the software to handle increasing amounts of work efficiently. It involves planning and designing systems that can grow to accommodate more users, data, and transactions without sacrificing performance. It’s about future-proofing your system from day one.

How does cloud computing help with scalability?

Cloud computing provides resources on demand, making it easy to scale applications up or down based on current needs. It offers flexibility and cost-effectiveness, removing the need for upfront investment in physical infrastructure. Cloud services like AWS and Azure offer automatic scaling features that help manage increased loads seamlessly.

What are efficient algorithms, and why are they important?

Efficient algorithms are step-by-step procedures for solving problems while using as little time and memory as practical. They are crucial for scalable software because their cost grows slowly as inputs grow, keeping your software fast and responsive even as it handles larger data volumes or more users.

How can I ensure my software is secure as it scales?

To ensure security as your software scales, develop robust security policies, adopt security best practices like multi-factor authentication, and implement regular monitoring and incident management systems. These measures help protect against potential threats and ensure the safety of your data and software environments.